Self-serving thought experiments

Debunking the aspirational dystopias of artificial general intelligence and the trolley problem

A few years ago my collaborator Julian de Freitas and I published the first of what became a series of papers about the problems with applying the thought experiment known as “the trolley problem” to autonomous cars. The trolley problem, to briefly recap, was originally designed as a moral philosophy hypothetical that forces people to choose between two unpalatable options. Faced with an out-of-control trolley, do you let it continue on its current path and run over a group of people tied, for some reason, to the tracks? Or do you divert it onto a branch line where only a single person is in harm's way? Posing this conundrum can prompt people to consider the moral complexities of weighing the good of the many against the good of the few, questions of agency, and the moral weight of intentional harm versus harm passively accepted. It's fun to think and talk about, and it has produced a good amount of genuinely productive work in moral philosophy.

People love to apply the trolley problem to autonomous cars. It seems like a good fit if you just substitute some words. Instead of a trolley, there's an autonomous car. Instead of people tied to the train tracks, you have pedestrians minding their own business. Instead of a human who can divert the trolley, you have the car itself, deciding who lives and who dies. The idea of an autonomous car facing life-or-death moral decisions captured people’s imaginations and accumulated a lot of admiring press. It seemed like an excitingly futuristic question to ask about an excitingly futuristic technology.

Julian and I argued that applying the trolley problem to autonomous cars is almost always inappropriate. The biggest problem is that thinking about situations where the vehicle has no good options is precisely the wrong mindset for thinking about safety in autonomous cars. The problem you should be trying to solve—and which, happily, most manufacturers are trying to solve—is how to avoid situations with no good outcome in the first place. Think about the chain of events that would be necessary for an autonomous car to find itself with no option but to injure a pedestrian. Why couldn't the vehicle just stop? Why is it going so fast? Wouldn't more conservative motion planning largely eliminate the already remote chance of a trolley problem-type scenario?

There's another issue with the trolley problem when applied to autonomous cars. For a moral dilemma like the trolley problem to apply at all, the car has to know precisely what would happen. That is, the autonomous car would need to know the location of every component of the environment around it, including pedestrians. It would also have to know how grievously each of those pedestrians would be injured, and precisely where each of them would be some distance into the future. And in many of the proposed autonomous vehicle thought experiments, it would have to know information like whether one pedestrian was a child or another was drunk. Not to put too fine a point on it, but for the designers of actual autonomous cars today, this would be an extremely good problem to have. Every one of the preconditions I listed is an incredibly hard problem in computer sensing. For an autonomous car to know, with 100% confidence, the locations and identities of every relevant object in its environment would be a substantial and impressive advance over the current state of the art.

In other words, the popularization of the trolley problem as an issue of concern for autonomous cars actually has the effect of making autonomous cars seem much, much more advanced than they actually are. It leads the public to believe that all of the humdrum everyday problems of seeing and understanding the environment have been solved, and that now the issue is how to manage the thorny philosophical implications unlocked by the superhuman abilities of these robots. If you are operating a self-driving car company, and wish to claim, against the available evidence, that your vehicles are making people safer, it is very helpful to you if it is a commonplace that the issues you are really struggling with are godlike questions of life and death, instead of rather more prosaic engineering questions like whether pedestrians blink out of existence when they step behind a light pole.

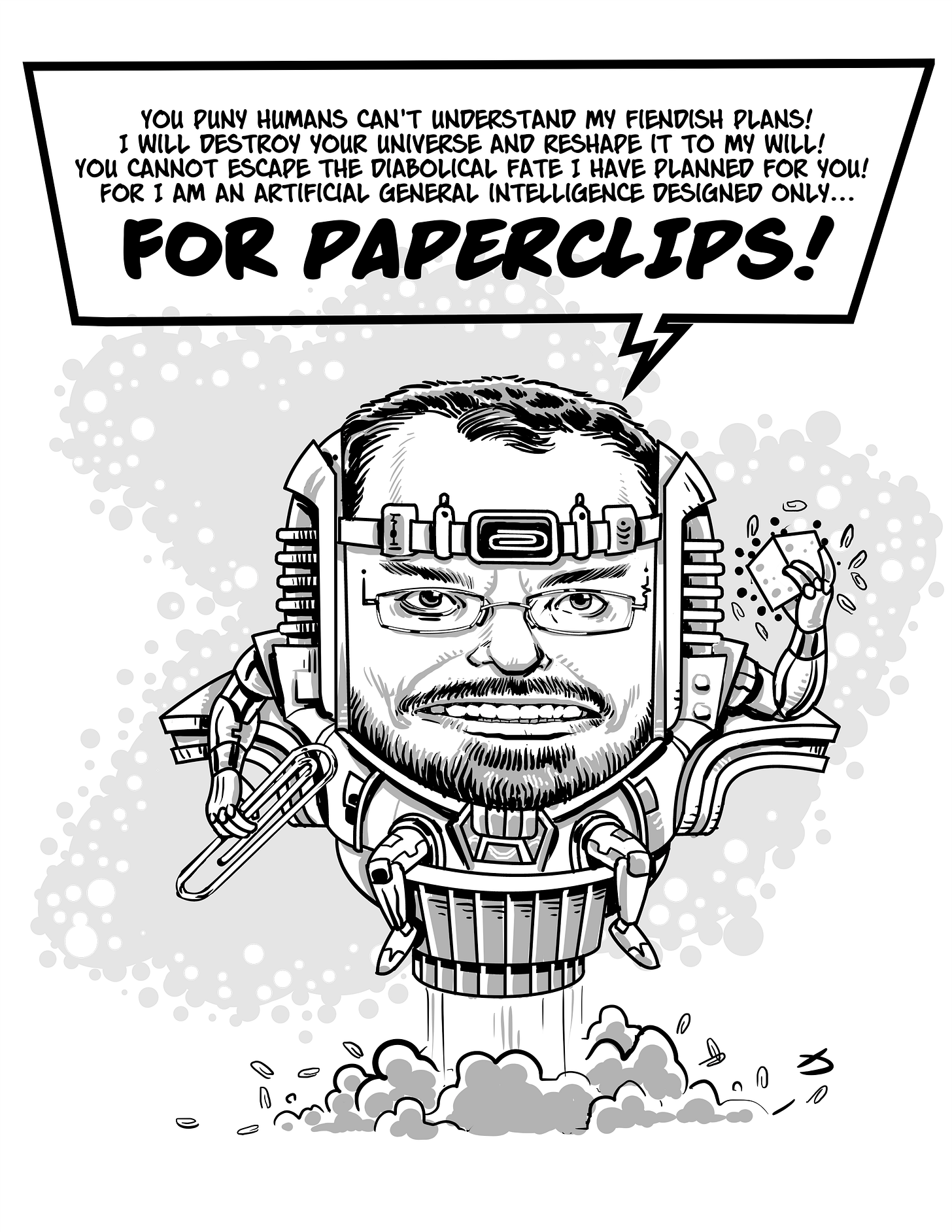

Which brings us to the letter recently written by Sam Altman, venture investor and CEO of OpenAI, about how OpenAI is preparing—ethically and technically—for the arrival of "AGI", or "Artificial General Intelligence". "AGI" has been a buzzword in certain circles for a while: the effective altruists whom Sam Bankman-Fried was supporting with allegedly purloined customer funds from FTX are positively obsessed with it. It suffers from some of the same definitional problems as "AI" does. Roughly, people describe it as a computer program that is as intelligent as—or more intelligent than—a human across the entire spectrum of cognitive tasks that humans can accomplish: not just putting together coherent sentences or playing chess, but also social reasoning, intuitive physics, everything.

That would be a remarkable achievement. It is also not an achievement that follows in any particularly direct way from the current state of progress on large language models. LLMs—as I've discussed, and as is clearly laid out in the "Stochastic Parrots" paper by Emily Bender, Timnit Gebru et al.—are incredibly good at producing the modal linguistic response to an input. These models are trained on enormous corpora of text and produce outputs of remarkable fluency, if of iffy and unknowable reliability.

But a brain is not a language-generating engine. The psychologist J.J. Gibson, whom I mentioned last time, often argued that the correct first step to understanding perception was to understand what perception was for. Why are we able to see? What evolutionary purpose does vision serve? Only by starting with that question can you develop the correct intuition for how vision is likely to work. The same is true for both language and intelligence. Humans didn't evolve the capacity to produce language, or the big brains capable of such astounding feats of reasoning, in a vacuum. Nor did those faculties evolve as disembodied reactions to the presence of a nigh-infinite collection of deep linguistic training data.

Human brains evolved in an environment where, in all likelihood, rich cooperation was increasingly necessary to procure the resources to thrive and reproduce. Michael Tomasello has argued convincingly and at length that the new kinds of social cooperation our ancestors were able to develop both drove and were driven by the linguistic and cognitive abilities we now think of as general-purpose and central to our functioning.

One of the things this means, if we follow J.J. Gibson (which not everybody does: I should probably give you fair warning that I am venturing further than usual past what you might consider the current scientific mainstream consensus), is that language, for instance, evolved to solve a particular set of human problems. Our distant ancestors wanted to eat and reproduce in an environment (the plains of Africa) where the mental tools that had allowed them to succeed as arboreal hunters were insufficient, and where the dividends paid by being able to work constructively and consistently with other, unrelated members of the species—something other apes can't really accomplish—were enormous.

All of this discussion of socialization on African plains in distant evolutionary history is a bit vague. You can be more specific about it. The big point to make is that human consciousness and language are embodied: we think about the world and the other people in it while existing in that world. Communication happens between beings who have roughly the same existence. We all need to eat, we all get lonely. We all have people that we love.

Our brains evolved to support our embodied selves in a physical world. That’s what they’re for. The parts of the brain we think of as “conscious” or “higher cognition” are deeply driven by our physiological and emotional reactions to the world.

This is something you can talk about at an even lower orbit of abstraction, in the precise terms of neuroscience. The parts of our reasoning that we are conscious of—the production of language, communication with and inferences about others—interact with our pre-conscious, embodied selves via a chemical signaling system. The chemicals that do the signaling are called modulatory neurotransmitters. These neurotransmitters—chemicals in the brain with names like serotonin, norepinephrine, and dopamine—selectively promote and depress the activity of different parts of our brain based on information about the state of different pre-conscious or unconscious parts of our nervous system.

When we realize that we are lashing out at somebody because we're hangry, say, losing our temper because we need to eat, we are realizing something about this signaling system. We’re applying our metacognition—our ability to think about ourselves—to the interaction between our conscious sense of the world and our body's pre-conscious knowledge that it needs food. That interaction is modulated by this signaling system, made up mostly of the various modulatory neurotransmitters, acting on all parts of the brain. These modulatory neurotransmitters are constantly flowing throughout our brain and greater nervous system, activating certain areas and depressing others. They ebb and flow in response to our overall physiological and emotional state, drawing information and influence from the whole body, no matter how peripheral. They are the shifting headwaters from which decision-making, communication, and all our other interactions flow. To put it another way, the collective activity of modulatory neurotransmitters on different parts of our brain forms the substrate on which our intelligence and linguistic faculties are constructed. That activity gives our consciousness its direction and shape.

The neuroscientist Antonio Damasio describes the human ability to use this complex cocktail of communicated physiological information in terms of "somatic markers": his somatic marker hypothesis has tremendously advanced our understanding of the ways that cognition can go wrong when these signals are absent or garbled.

Large language models lack this substrate. They are not embodied—they do not have a persistent existence in a rich world in any kind of comprehensible way—and they do not have physiological needs. They are not designed to have anything like the rich substrate of emotional valence, physiological need and desire that is produced by our modulatory neurotransmitter system. To go back to (my simplistic paraphrase of) J.J. Gibson's framing, they don't have the things that language and intelligence are for.

That these models are nonetheless so remarkably facile with language is genuinely exciting and is prompting much reconsideration of fundamental ideas in linguistics. When it comes to the question of whether these models are on the path to artificial general intelligence, though, I think their lack of a substrate of valence and desire rich enough to match the one that undergirds human cognition is probably the right place to start.

This is not how machine learning researchers generally like to think about future progress. They are fond of talking about the "Bitter Lesson" of ML (Rich Sutton's phrase), which is that methods which embed more understanding of the world—and more elaborate models of human cognition—almost always end up performing worse on real-world tasks than methods that simply rely on more and more training data and computation. It is, as an empirical matter, true enough, and a useful counterargument to keep in mind when, like me, you spend a lot of time thinking about the ways that machine learning models and humans differ. I think, however, there's a way to reframe the gap between LLMs and AGI that does not ignore the lesson, and it goes back to J.J. Gibson. The question that LLMs are answering ("what's the thing to say in response to a human that the human will find the most correct?") is wildly different from—and much simpler than—the question that human intelligence evolved to solve: "how do I keep this collection of human needs and neuroses fed and alive and reproducing in a world where it makes sense to trust and work with others?" That latter question is not well-formed from a computer science perspective. Coming up with a more tractable but similarly rich and complex question to ask of our ML systems is fantastically difficult in ways we only barely understand. That is the entirely unsolved challenge that lies between us and the dawn of AGI.

So if that's true—if the path from today's large language models to artificial general intelligence involves so huge and unexamined a barrier—then why is Sam Altman talking in very serious tones about how OpenAI is preparing for the onset of AGI? I think the idea of AGI is helpful to Sam Altman and OpenAI in much the same way that the trolley problem is helpful to people who are building self-driving cars. If you orient your concerns about artificial intelligence as it exists today around the problems it might introduce if it ever becomes superhumanly omnicompetent, you pay less attention to the ways it is ineffective and even dangerous today. A focus on AGI lets people assume that today's machine learning (or "AI") systems are far, far more capable than they actually are. Large language models today are dangerous because they can’t be trusted. They are dangerous because they produce believable misinformation at a greater scale than was ever possible before. With AGI, those problems per se no longer exist: a system as competent and intelligent as humans across every possible application of human cognition will of course not be an accidental misinformation machine. If the biggest issue with today's large language models is, as Altman implies, that they might soon become far too capable, then the problems that afflict them today are almost incidental.

In truth, the problems that afflict large language models today are deeply related to the ways that those models are not—and will not be, in anything like their current form—artificial general intelligence, or even artificial intelligence as it is broadly understood. Thought experiments about superintelligent future AI and its effect on society obscure that point, just as trolley problem-style experiments obscure the very real present-day problems with autonomous cars. We shouldn’t let ourselves be distracted by evocative visions of a troubled but transformed future while systems that are dangerously unready for the purposes to which they are being put are widely deployed by secret-keeping, profit-seeking enterprises.